Stone of Madness

Misinformation research provides an academic veneer for political propaganda

The painting above, by the 16th-century Dutch painter Jan Sanders van Hemessen, depicts a man undergoing a rather violent operation to remove a stone from his brain via trephination, the intentional creation of a small hole in the skull. Trephination to remove a “stone of madness” from the head was practiced in medieval times as a supposed cure for “madness, idiocy or dementia”, typically by “medical quacks [who] roamed the countryside offering to perform the surgery.”

Madness, trephination, and self-appointed surgeons roaming the countryside looking for patients of course have me thinking about the recent attention paid to the academic subfield of misinformation studies. Today, I introduce several arguments about misinformation studies that I’ll be developing in depth here at THB in the coming weeks and months as I work on my new book.

They are:

Some experts claim to have unique access to truth amid contested scientific or empirical claims among legitimate experts. Their asserted ability to extract truth from contestation also empowers them to claim an ability to identify misinformation.[1] There is, however, no shortcut to truth, and no special group of scholars can ever discover such a shortcut.[2]

Such experts claim that misinformation among the public and policy makers can be corrected through a combination of the experts’ special access to truth and their ability to deploy effective counter-messaging, leading people from darkness into light. Such efforts typically represent propaganda masquerading as science. Instead of “follow the science,” the message in this case is “we shall lead you to the science to follow.”

The combination of these two claims results in stealth issue advocacy — defined in The Honest Broker as seeking to advance a political agenda under the guise of science — with pathological consequences for both science and policy.

In January, the World Economic Forum warned that the greatest threat facing the world over the next two years is “misinformation and disinformation.” One challenge in making sense of the WEF’s warning, and of how today’s risks may differ from the misinformation that has always been endemic to politics, is that neither the WEF nor misinformation scholars have given the notion of “misinformation” a meaningful definition.

For instance, in 2015, one group of scholars explained:

Misinformation by definition does not accurately reflect the true state of the world.

Simple, right? Once we identify “truth” then we will know that everything else is misinformation. But how do we know what is actually true? And who is “we,” anyway?

A 2023 survey of misinformation researchers offered little further help in defining misinformation, explaining:

the most popular definition of misinformation was “false and misleading information”

That definition is about as useful as defining research misconduct as improper conduct in research — circular and empty.

Some have suggested that we can use expert consensus views to delineate truth from misinformation, but this too is deeply problematic and leads to challenging questions such as:

Who counts as an expert?

What is the definition of a consensus?

What happens when experts disagree?

When a consensus evolves, does what was previously true become misinformation, and do yesterday’s truth tellers become today’s misinformers?

How can a consensus actually evolve if it is used as a tool to delineate truth from misinformation?

What happens when consensus processes become captured or politicized?

What if a consensus is wrong?

Consider a highly visible debate of the past few days over climate science. One position, expressed by climate scientist James Hansen, holds that the views of the Intergovernmental Panel on Climate Change (IPCC) on the pace of ongoing climate change are deeply flawed and subject to the myopia of its authors — Hansen believes that climate change is proceeding much faster than projected by the IPCC.

Does Hansen’s stance — explicitly contrary to the IPCC consensus reports — make him a peddler of misinformation?

An opposing view, expressed by climate scientist Michael E. Mann, is that Hansen’s views are “absolutely absurd” and the IPCC is correct in its views on the pace of evolving climate change.[3]

Hansen is the doyen of climate science and activism, so does that make Mann’s divergent views misinformation?

Who holds the truth here? Who is engaged in misinformation?

The answer to both questions is — Neither!

Important scientific questions with relevance to policy and politics are often contested, with legitimate views expressed that are in opposition or collectively inconsistent. Post-normal science is in fact perfectly normal.

Contestation of truth claims among experts does not mean that we know nothing or that anyone’s version of reality is just as good as anyone else’s. It does mean that truth often sits in abeyance: there are typically multiple, simultaneously valid truth claims, or “contested certainties,” as Steve Rayner used to call them. Such an excess of objectivity unavoidably provides fertile ground for cherry-picking and for building cases for vastly different perspectives and policy preferences.[4]

Our collective inability to achieve omniscience is one important reason why the management of experts and expert knowledge in the context of democratic governance is so very important for the sustainability of both science and democracy.[5]

Of course, the Earth is round, the vast majority of vaccines are safe and effective, and climate change is real. In political debates, opponents on competing sides of an issue often invoke these sorts of tame issues to suggest that the much larger class of wicked problems is just as simple and can be handled the same way. This is how the notions of science and consensus are often weaponized to stifle debate and discussion under the guise of protecting ordinary people from “misinformation.” But dealing with climate change is not at all like responding to an approaching tornado.

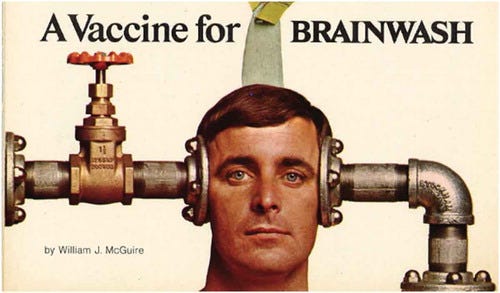

Misinformation experts analogize misinformation to a disease, a removable stone in the head. They do not prescribe drilling a hole in the skull to remove the idiocy; however, they do assert that they are akin to medical doctors who can “inoculate” the misguided and susceptible against misinformation, giving them occasional “boosters” with the goal of creating “herd immunity.”

The self-serving nature of such arguments is hard to miss, and it is easy to see why governments, social media platforms, major news media, and advocacy groups might find such arguments appealing in service of their own interests.[6]

For instance, Lewandowsky and van der Linden (2021) explain their theory as follows:

Just as vaccines are weakened versions of a pathogen that trigger the production of antibodies when they enter the body to help confer immunity against future infection, inoculation theory postulates that the same can be achieved with information: by preemptively exposing people to a sufficiently weakened version of a persuasive attack, a cognitive-motivational process is triggered that is analogous to the production of “mental antibodies”, rendering the individual more immune to persuasion.

It is of course well established that authorities can manipulate public perceptions and beliefs. Almost a century ago, political scientist Harold Lasswell in his PhD dissertation defined propaganda as “the management of collective attitudes by the manipulation of significant symbols.” Propaganda has a pejorative connotation, but under Lasswell’s definition everyone engaged in political discourse is engaged in propaganda.[7] You can’t swim without getting wet.

Can misinformation experts influence the opinions and expressed views of study subjects in experimental settings? Of course they can.

Can experts manipulate opinions outside such settings? It is more difficult, but of course we can. “Inoculation” is a more inscrutable and more palatable word than “propaganda,” but it means the same thing: the management of collective attitudes.

Contrary to the views of many misinformation scholars, there is of course absolutely no problem with experts calling things as they see them.[8] For instance, climate scientists James Hansen and Michael Mann have every right to express their divergent opinions, whether they are popular or unpopular, in the fat or thin part of a scientific consensus, or perceived as supporting this or that political agenda. Similarly, there is considerable value in teaching people critical thinking skills, fallacies of logic and reasoning, and how to avoid being fooled or exploited.

Problems arise when experts collaborate with authoritative institutions to enforce a party line on which views are acceptable (and unacceptable) to express, and that party line is then used to (try to) manage public opinion and expert discourse. Party lines can be enforced by institutions of the media, of government, and of course of science. An obvious recent example has been the ongoing effort to manage discourse over the possibility that COVID-19 resulted from a research-related incident. That effort has failed, but we are still dealing with the consequences.

Party line perspectives need not be the result of explicit decisions, but rather can result from like-mindedness and groupthink. Consider the self-reported political leaning of more than 150 misinformation researchers, as shown below. Can it be surprising that the “inoculations” (propaganda) applied by these scholars to wrong-thinking citizens have a decidedly leftward tilt?

The past several decades have seen the pathological politicization of science across several important fields, climate and public health among them. Much of this is of course because these issues are deeply contested politically, with partisan combatants on the left and right (who are otherwise at odds on policy) in strong agreement that they can achieve political gains by polarizing them.

While politicians and advocates bear their share of responsibility, make no mistake: we in the expert community have also played our part in pathological politicization by becoming partisans as well, especially authoritative scientific institutions and their leaders. Misinformation research, and its role in seeking to enforce party-line perspectives, is but one example of how experts have chosen to fuel the flames of polarization.

Fortunately for both science and democracy, there are much better alternatives than seeking to remove the “stone of madness.”

❤️Click the heart to support open expression and debate over trephination!

Thanks for reading! These ideas are a work in progress, so I would appreciate your engagement, challenges, questions, counter-arguments, and stress testing. What lines of argument need more development? What is as clear as mud? Thank you, and remember, honest brokering is a group effort.

In personal news, my final spring break as a professor at the University of Colorado Boulder was wonderful, spent with my family. I’ll have to try to maintain the practice of a “spring break” going forward — it is good for the mind and soul.

THB is reader supported, and I welcome your support at any level that may make sense, as it is your support that makes possible the independent research and analyses of THB.

[1] Self-appointed “fact checkers” at times also make such a claim.

[2] Science is the shortcut.

[3] Mann has me blocked on X/Twitter, so please head over there to see the Post/Tweet that I’m referring to here.

[4] The notion of “excess of objectivity” comes from Dan Sarewitz and is explored in his brilliant 2004 paper on how science makes environmental controversies worse.

[5] Here, by “expert” I do not simply mean credentialed or academic experts, but rather the full range of expertise that may be relevant to understanding a given issue.

[6] Michael Shellenberger, whom I’ve known for a long time, argues that there is a “Censorship Industrial Complex,” which is a different argument from the one I am making here today, although there are some overlapping areas of agreement that I’ll develop in future posts.

The only effective and democratically legitimate way to counter propaganda is with better propaganda.
