Long-time readers of THB will know well that I am strongly supportive of formal scientific assessments — a form of science arbitration, as defined in my book which gives this site its name. Scientific assessments are essential for understanding what relevant experts collectively think they know, what they think they don’t, along with surfacing uncertainties, disagreements, and areas of ignorance.

Assessments, when done well, are not truth machines, but offer a provisional snapshot in time that can inform decision makers and the broader public on the state of current scientific understandings.

The Intergovernmental Panel on Climate Change (IPCC) has performed scientific assessments of climate change (six so far) since 1988. I have been a strong supporter of the IPCC, testifying before the U.S. Congress that, “if it did not exist, would have to be invented.”

That said, the most recent IPCC assessment offered multiple troubling indications that its quality may be slipping, with key parts of the report being unreliable, placing its legitimacy at risk. In a nutshell, based on my observations as a long-time IPCC outsider[1]: the IPCC’s Working Group 1 on physical science has overall played things straight in my areas of expertise, but some cracks are showing. IPCC Working Group 2, on impacts and vulnerability, is deeply politicized and unreliable.[2] Its Working Group 3 on mitigation has been largely captured by a narrow academic community, focused on integrated assessment modeling.

In today’s post I highlight some of the many issues with IPCC quality control that I have observed in its Sixth Assessment reports (AR6). The factors underlying issues such as these need to be addressed as the IPCC ramps up its seventh assessment cycle. Political and public legitimacy is difficult to earn and easy to lose. The IPCC is too important to become just another politicized institution of climate politics.

IPCC assessments are expected to cover the “range” of views in the literature and to be “balanced”:

In Assessment Reports, Synthesis Reports, and Special Reports, Coordinating Lead Authors (CLAs), Lead Authors (LAs), and Review Editors (REs) of chapter teams are required to consider the range of scientific, technical and socio-economic views, expressed in balanced assessments.

The expectation that the IPCC assessments are to be “comprehensive” was reinforced in the 2010 IAC review of the IPCC’s policies and procedures:

IPCC assessment reports are intended to provide a comprehensive, objective analysis of the available literature on the nature and causes of climate change, its potential environmental and socioeconomic impacts, and possible response options.

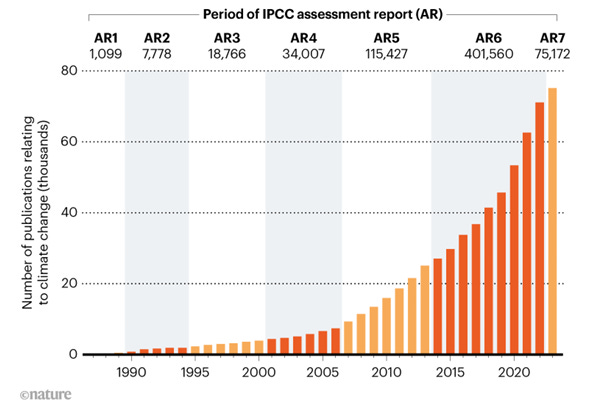

A major challenge facing the IPCC is the exponential growth of scientific literature on climate change. The figure below shows that the IPCC identified more than 400,000 publications related to climate change that were published during the AR6 assessment cycle.

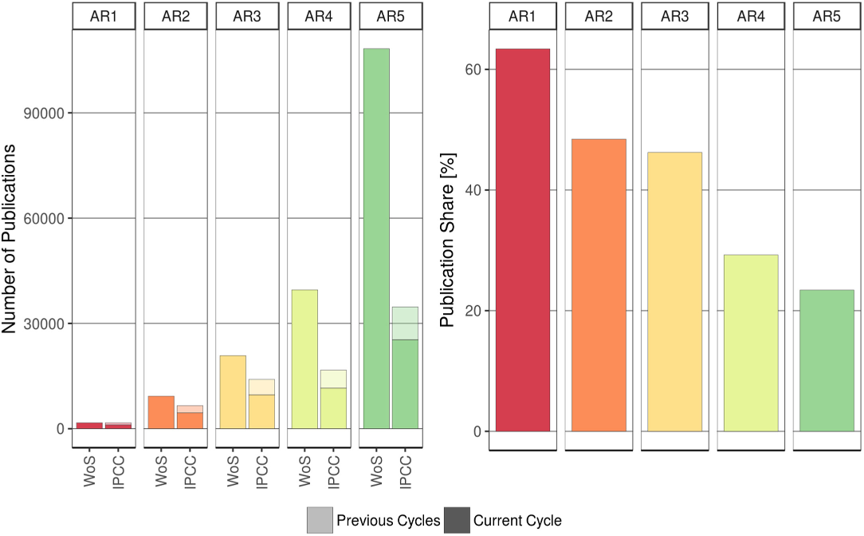

The IPCC recently acknowledged the challenge posed by the enormous and growing literature: “The volume of publications relevant to climate change has been growing exponentially, roughly doubling in volume with each IPCC cycle. . . . This poses ever increasing challenges for author teams.” One consequence of the burgeoning literature is that less and less of the literature has been cited in each assessment.

The figure above shows that successive IPCC reports have cited a smaller proportion of the literature that the organization is charged with assessing comprehensively. Does that imply that there is too much climate science? That there are large areas of redundancy? Are there huge gaps in the assessed literature? Does the subset cited reflect the much larger proportion that is not cited?

The declining proportion of the relevant literature assessed by the IPCC is problematic, according to Minx et al. 2017, because it opens the door to bias in assessment:

[A]t least 80% of the most recent scientific literature could not be directly reviewed by IPCC authors in AR5 and was thus not included in the synthesis of scientific knowledge. Taken together, the sheer size of the current body of literature and the much higher share of publication coverage in earlier IPCC assessments suggest that reliance on expert judgement in selecting which of the literature to assess has become increasingly pronounced over time. The bias introduced by this expert selection and therefore the impact of ‘big literature’ on the assessment outcomes remains unclear and a discussion of procedural options to deal with it has so far been notably absent.

The main reason why the IPCC is expected to be comprehensive in its assessments is to avoid bias that might be introduced — intentionally or unintentionally — through selective citation of a small subset of the literature that may or may not reflect the broader literature.

One particularly egregious example of selective citation bias is related to my research. On hurricanes, the IPCC AR6 WG1 stated (emphasis added):[3]

A subset of the best-track data corresponding to hurricanes that have directly impacted the USA since 1900 is considered to be reliable, and shows no trend in the frequency of USA landfall events (Knutson et al., 2019). However, an increasing trend in normalized USA hurricane damage, which accounts for temporal changes in exposed wealth (Grinsted et al., 2019), and a decreasing trend in TC translation speed over the USA (Kossin, 2019) have also been identified in this period.

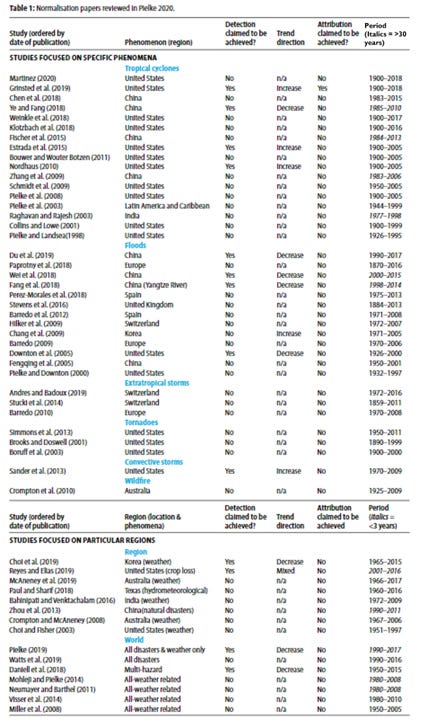

The citation to Grinsted et al. 2019 is meant to suggest that the NOAA Best-Track data on hurricanes may be unreliable. This claim is not just highly misleading (don’t use economic data to evaluate climate data, duh) but it is also a notable outlier in the literature. Here, the IPCC failed to cite eight other studies with results contrary to Grinsted et al. 2019: Martinez 2020, Weinkle et al. 2018, Klotzbach et al. 2018, Bouwer and Wouter Botzen 2011, Schmidt et al. 2009, Pielke et al. 2008, Collins and Lowe 2001 and Pielke and Landsea 1998.[4]

Was the IPCC ignorant of this literature or was it intentionally trying to present a biased view? Neither option is a good one.

The IPCC AR6 WG2 was similarly highly selective in its citation of literature on U.S. normalized hurricane losses (emphasis added):[5]

Studies of US hurricanes since 1900 have found increasing economic losses that are consistent with an influence from climate change (Estrada et al., 2015; Grinsted et al., 2019), although another study found no increase (Weinkle et al., 2018).

In this case, Estrada et al. 2015 make no claims of attribution — so it is miscited — and Weinkle et al. 2018 is one of nine such studies in the literature that found no increase in losses after normalization, consistent with the historical record of U.S. hurricane landfalls.

Again, was the IPCC ignorant or deceptive?

More generally, the IPCC ignored more than fifty other studies that normalized disaster losses, none of which — except Grinsted et al. 2019, cited multiple times by IPCC — claimed to have attributed normalized disaster losses to changes in climate. These studies are listed in the table below. The bias here is undeniable.

The IPCC has long faced challenges calling things straight on disasters and climate change, something I documented in detail in The Climate Fix, and discuss in my podcast below.

Fifteen years ago, quality control issues in the IPCC Fourth Assessment motivated an external review by the InterAcademy Council, which recommended a range of actions to improve assessment integrity. One key problem was the frequent citation of news stories and blog posts in the AR4, particularly in its Working Group 2.[6]

Today, the IPCC is very clear that:

In general, newspapers and magazines are not valid sources of scientific information. Blogs, social networking sites, and broadcast media are not acceptable sources of information for IPCC Reports.

However, IPCC AR6 Working Group 3 prominently referenced a blog post to justify the continued use of the extreme, implausible RCP8.5 scenario.[7]

Climate projections of RCP8.5 can also result from strong feedbacks of climate change on (natural) emission sources and high climate sensitivity (AR6 WGI Chapter 7), and therefore their median climate impacts might also materialise while following a lower emission path (e.g., Hausfather and Betts 2020).

Hausfather and Betts (2020) does not appear in the report’s reference list, itself a notable oversight, and a full reference would have revealed it to be a blog post. Even so, the citation clearly refers to a 2020 blog post at Carbon Brief, which sought to defend the continued prioritization of RCP8.5.

The IPCC’s discussion of high-end emissions scenarios — in its Box 3.3 — cites an outlier defense of RCP8.5 (Schwalm et al. 2020) while ignoring the important work of Justin Ritchie, providing another example of selective citation that distorts the interpretation of the broader literature.

Another source of bias is the self-citation of papers by IPCC authors. Consider IPCC WG1 Chapter 11 Section 11.7.1 on tropical cyclones (which includes hurricanes). That subsection of Chapter 11 includes 379 non-unique references to the published literature. Of these citations, 70 (~18%) are to papers authored by just 5 of the Chapter 11 authors. One set of these citations introduces a major error into the IPCC report, which made its way into the IPCC Synthesis Report.

Compare these numbers to the almost 20,000 papers on “tropical cyclones” and “climate change” published from 2013 to 2020, the period of the IPCC AR6 assessment, according to Google Scholar.
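For readers who want to check a tally like this themselves, here is a minimal sketch in Python. It assumes each citation has already been reduced to a list of author surnames. The function name `self_citation_share` and the toy author names are hypothetical illustrations, not data from the IPCC report and not the procedure used to produce the numbers above.

```python
def self_citation_share(citations, chapter_authors):
    """Count citations that include at least one chapter author.

    citations: list of author-surname lists, one per (non-unique) citation.
    chapter_authors: surnames of the chapter authors to check against.
    Returns (number_of_matching_citations, share_of_all_citations).
    """
    authors = {name.lower() for name in chapter_authors}
    hits = sum(
        1
        for cited_authors in citations
        if any(name.lower() in authors for name in cited_authors)
    )
    share = hits / len(citations) if citations else 0.0
    return hits, share


# Toy input with made-up surnames (hypothetical, for illustration only).
toy_citations = [["Alpha", "Beta"], ["Gamma"], ["Beta"], ["Delta", "Epsilon"]]
toy_hits, toy_share = self_citation_share(toy_citations, ["Beta"])

# The share reported in the text: 70 of 379 non-unique citations.
reported_share = 70 / 379
print(f"{reported_share:.1%}")  # 18.5%
```

Matching on surnames alone would overcount where unrelated researchers share a name, so a careful tally would need to check each match by hand.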

IPCC Working Group 3 has a massive self-citation problem — a tally of self-citations by 5 prominent integrated assessment modelers who are also WG3 authors shows well over 500 citations to their own work in the WG3 report. IPCC WG3 needs to diversify its intellectual scope, which has been captured by a tiny academic community for decades.

The examples here indicate that one really needs to be an expert to be able to identify issues with assessment. For almost everyone else, the integrity of assessment is a matter of trust in the IPCC as an institution. With problems like the ones I’ve documented, the IPCC is playing a risky game with its own legitimacy.

Fortunately, some in the IPCC appear to be aware of these issues. The IPCC has proposed a workshop in October 2025 to consider approaches to assessment that might improve its ability to accurately assess the literature and to avoid biases. The IPCC says that it will consider tools of artificial intelligence and formal systematic reviews. Any IPCC insider participating in this effort is invited to contribute a guest post here at THB (as is anyone who’d like to respond to this post).

The IPCC sits at a fork in the road — it can take steps to improve the quality of its assessments, or, it can continue to serve as a club, producing biased interpretations of the literature and promoting the careers of its authors. The choice between the two would not seem difficult.

Comments welcomed!


[1] I’m one of a small group of researchers whose peer-reviewed work is cited by each of the IPCC’s three working groups. More than 15 years ago I was nominated by the U.S. government to serve as an author on the IPCC Special Report on Extreme Events (SREX). I was told at the time by a high-ranking IPCC official that because of the conclusions of my (and my colleagues’) research on extreme events I would never be invited to participate in the IPCC, despite my knowledge of the literature. That judgment proved correct.

[3] IPCC AR6 WG1, pp. 1585-1586.

Bouwer, L. M., & Wouter Botzen, W. J. (2011). How sensitive are US hurricane damages to climate? Comment on a paper by WD Nordhaus. Climate Change Economics, 2(01), 1–7.

Collins, D., & Lowe, S. P. (2001). A macro validation dataset for US hurricane models. Casualty Actuarial Society.

Grinsted, A., Ditlevsen, P., & Christensen, J. H. (2019). Normalized US hurricane damage estimates using area of total destruction, 1900–2018. Proceedings of the National Academy of Sciences, 116(48), 23942–23946.

Klotzbach, P. J., Bowen, S. G., Pielke, R., & Bell, M. (2018). Continental US hurricane landfall frequency and associated damage: Observations and future risks. Bulletin of the American Meteorological Society, 99(7), 1359–1376.

Martinez, A. (2020). Improving normalized hurricane damages. Nature Sustainability, 3, 517–518.

Pielke, R. A., Gratz, J., Landsea, C. W., Collins, D., Saunders, M. A., & Musulin, R. (2008). Normalized hurricane damage in the United States: 1900–2005. Natural Hazards Review, 9(1), 29–42.

Pielke, R. A., & Landsea, C. W. (1998). Normalized hurricane damages in the United States: 1925–95. Weather and Forecasting, 13(3), 621–631.

Schmidt, S., Kemfert, C., & Höppe, P. (2009). Tropical cyclone losses in the USA and the impact of climate change – a trend analysis based on data from a new approach to adjusting storm losses. Environmental Impact Assessment Review, 29(6), 359–369.

Weinkle, J., Landsea, C., Collins, D., Musulin, R., Crompton, R. P., Klotzbach, P. J., & Pielke, R. (2018). Normalized hurricane damage in the continental United States 1900–2017. Nature Sustainability, 1(12), 808

[5] IPCC AR6 WG2, p. 1978.

[6] Long-time observers may recall the IPCC’s 2035 glacier error that helped to motivate the 2010 IAC review.

[7] IPCC AR6 WG3, p. 317.

The underlying question that is rarely asked is: how long is it possible for a scientific organization located within a political organization, like the United Nations, to remain scientific if the science doesn't coincide with the political agenda? I think that question is beginning to answer itself.

The main problem I see with the IPCC (and mainstream climate science) is that it's fixated on carbon and blind to land change. For instance, in explaining how global models missed the 2023 heat anomaly, Gavin Schmidt of NASA points to reduced low-cloud cover, but only as a greenhouse feedback happening over the oceans. The role of land degradation in diminishing low-cloud cover on land is left out. Until the IPCC takes seriously the link between land and climate, not simply in terms of carbon but also in terms of water, it is only looking at a portion of the picture. Maybe it needs a couple more C's in its name: "Intergovernmental Panel on Carbon Caused Climate Change."

By the way, there was an organization focused on land change effects, the IGBP (International Geosphere Biosphere Program) which was formed about the same time as the IPCC, but largely ignored and shuttered in 2015.

The reforms needed include a fundamental shift in vision to include living processes, water cycles and the regulatory functions of the biosphere, IMO.