Apples, Oranges, and Normalized Hurricane Damage

An incredible data error in a paper celebrated by the IPCC offers a science integrity test for climate science

A “normalization” of past disaster losses seeks to estimate what amount of damage extreme events of the past would cause under today’s societal conditions. Long-time THB readers will know that the concept of and first methodology for normalization was developed more than 25 years ago by me and Chris Landsea, of the National Hurricane Center, and applied to U.S. landfalling hurricanes.
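As a rough illustration of the idea, a normalization in the spirit of the original approach scales a storm's base-year damage by how much prices, real wealth per capita, and population have changed between the year of landfall and today. The Python sketch below assumes exactly that three-factor form. The function name, the factor names, and the numbers are hypothetical and are not taken from any published dataset.

```python
def normalize_loss(base_damage, inflation_factor, wealth_per_capita_factor, population_factor):
    """Scale a base-year damage estimate to present-day societal conditions.

    Each factor is a ratio of today's value to the landfall-year value,
    so a factor of 1.0 means "no change since the storm".
    """
    return base_damage * inflation_factor * wealth_per_capita_factor * population_factor


# Hypothetical storm: $100 million in base-year damage, with made-up factors.
normalized = normalize_loss(
    base_damage=100e6,
    inflation_factor=10.0,          # assumption: prices are 10x higher today
    wealth_per_capita_factor=3.0,   # assumption: real wealth per person tripled
    population_factor=5.0,          # assumption: affected population grew 5x
)
print(f"${normalized / 1e9:.1f} billion")  # $15.0 billion
```

The point of the sketch is that everything downstream is a multiple of `base_damage`, so any inconsistency in the base damage series carries straight through to the normalized results.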

Since that time, more than 70 peer-reviewed papers have been published building on our methods and seeking to normalize damage time series in regions and countries around the world and for a wide range of phenomena — including floods, tornadoes, extratropical cyclones, convective storms, wildfire and earthquakes.

With respect to normalized U.S. hurricane losses, both the Intergovernmental Panel on Climate Change (IPCC) AR6 WG and the Sixth U.S. National Climate Assessment (USNCA) have chosen to highlight just one normalization study from this growing literature — Grinsted et al. 2018, which I’ll just call G18.1

Contrary to every other normalization study, of U.S. hurricanes or otherwise, G18 conclude that even after normalizing historical hurricane loss data for changes in population and wealth, there remains an underlying increasing trend in losses which can be attributed to human-caused climate change.

I can report today — in jaw-dropping fashion — that the increasing trend reported in G18 and promoted by IPCC and the USNCA is not the result of human-caused climate change, but rather, it is the result of a human-caused data error that is obvious and undeniable. I have contacted PNAS to request a retraction and reached out to Dr. Grinsted.

Grab a cup of coffee and read on. This error is obvious and fatal to the research. It requires immediate action by PNAS, and corrections by the IPCC and USNCA.

Let’s start with the basics of all normalization studies: Any normalization of a historical loss time series necessarily starts with a historical loss time series. For total direct damages from hurricanes, there is only one such historical loss time series compiled with consistent methods dating to 1900.

How do I know this? Well, my colleagues and I created this time series over the past 30+ years, based on original research and sources, and carefully documented in the peer-reviewed literature.

The time series is based on annual reports published in the Monthly Weather Review and then later from estimates of the U.S. National Hurricane Center. The entire time series dating to 1900 is based on a consistent methodology that produces apples-to-apples results, with ongoing research to keep the series consistent and up to date.

Below are the peer-reviewed papers which describe methods and identify the original data sources — as the dataset grew from 41 years in length to 118 years — making long-term U.S. hurricane normalization possible.

Landsea 1991: 1949-1989

Pielke and Landsea 1998: 1925-1995

Pielke et al. 2008: 1900-2005

Weinkle et al. 2018: 1900-2017

This is where things get interesting.

G18 report that, instead of using the base damage data that Pielke et al. 2008 compiled and published in the peer-reviewed literature, they chose to use a non-peer-reviewed dataset that they found online from an insurance company called ICAT. G18 explain this in the excerpt from their paper below (where Pielke et al. is the same Pielke et al. 2008 linked immediately above).2

Let me tell you about the “ICAT dataset.”

The second author of Pielke et al. 2008 was my brilliant former student Joel Gratz. After graduating with an M.S. from the University of Colorado Boulder in 2005, Joel took a job with a Boulder-based insurance company called ICAT.3 Yes, that is the same “ICAT” as referenced by G18.

At ICAT, one of the projects that Joel took on, with my collaboration, was to create an online tool that would display currently active tropical cyclones alongside their historical analogues, and display the historical normalized damage of those analogues — Very cool!4

The original base damage data used by Joel to create the ICAT online tool was that published in Pielke et al. 2008, as we wanted to ensure that everything ICAT used was based on peer-reviewed research. Thus, the ICAT online tool was explicitly designed to be based on Pielke et al. 2008, meaning that originally there was no such thing as an “ICAT database.”

In 2010, Joel moved on from ICAT to bigger and better things. The ICAT online tool continued on for several more years, and its stewards chose to extend the data beyond 2005, to replace data in Pielke et al. 2008, and to invent other historical loss estimates. These choices were not documented, justified or peer reviewed.5

The resulting “ICAT dataset” was used primarily for marketing purposes and evolved from being based on peer reviewed science to something quite different. The “ICAT database” was thus never appropriate for research.

By the time that G18 chose to download the “ICAT database” it had been changed from Pielke et al. 2008 in many ways — all untraceable.

After a bit of sleuthing (and it did not take much) I can show you that the main result of G18, an increasing trend in normalized hurricane losses, is entirely the consequence of the improper changes made by ICAT to the original peer-reviewed dataset of base losses, and has nothing to do with hurricanes.

Let’s look at some numbers.

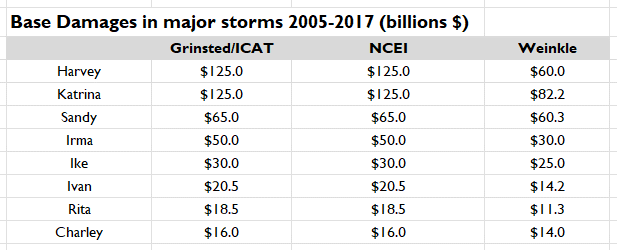

Weinkle et al. 2018 extended the Pielke et al. 2008 dataset to 2017, using the same base damages. The table below shows how G18/ICAT compares with Weinkle et al. 2018 for base damages of major storms early in the last century — You can see that the base damage loss estimates are identical.

Now let’s take a look at how G18/ICAT compares with Weinkle et al. 2018 for base damages of major storms of the early 21st century — You can see that they are dramatically different, with G18 values much larger.

Why are the values of G18/ICAT the same as Weinkle et al. 2018 long ago, but much larger in recent decades?
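One way to check where two base-damage datasets part ways is to line them up storm by storm and look at the ratio between them. The Python sketch below does that. The storm labels and dollar values are placeholders invented for illustration; they are not the figures from G18, ICAT, or Weinkle et al. 2018.

```python
def compare_base_damages(reference, candidate):
    """Return (year, storm, ratio) for storms found in both datasets, sorted by year.

    ratio = candidate value / reference value, so 1.0 means the two agree.
    """
    rows = []
    for key, ref_value in reference.items():
        if key in candidate:
            year, storm = key
            rows.append((year, storm, candidate[key] / ref_value))
    return sorted(rows)


# Placeholder base damages in millions of dollars (NOT real data).
reference = {
    (1926, "Storm A"): 100.0,
    (1938, "Storm B"): 300.0,
    (2004, "Storm C"): 14000.0,
    (2005, "Storm D"): 80000.0,
}
candidate = {
    (1926, "Storm A"): 100.0,
    (1938, "Storm B"): 300.0,
    (2004, "Storm C"): 21000.0,
    (2005, "Storm D"): 120000.0,
}

rows = compare_base_damages(reference, candidate)
for year, storm, ratio in rows:
    status = "same" if ratio == 1.0 else "differs"
    print(year, storm, f"{ratio:.2f}", status)

# Earliest year in which the two datasets disagree.
first_divergence = min(year for year, _, ratio in rows if ratio != 1.0)
print("First divergent year:", first_divergence)  # First divergent year: 2004
```

A pattern of identical early values and systematically larger recent values, as in this toy example, is the signature of one source having been swapped for another partway through the record.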

The answer: NOAA’s Billion Dollar Disasters.

As I’ve documented, in the late 1990s NOAA started tabulating a time series of “billion dollar disasters.” Unlike the base damage time series of U.S. hurricanes since 1900 which included only direct economic damages,6 the NOAA “billion dollar disaster” time series also compiles many types of indirect damages, such as crop losses, business interruption, federal disaster assistance, inland flooding, and various undefined “data transformations.” The “billion dollar disaster” database is created and maintained by a group within NOAA called the National Centers for Environmental Information or NCEI.

It is undeniable that after Joel Gratz left ICAT in 2010, ICAT decided to replace and extend the base damages of Pielke et al. 2008 with those from the NOAA/NCEI “billion dollar disaster” dataset. This was methodologically inappropriate as it stapled a short time series of oranges onto a long time series of apples.
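To see why this kind of splice matters for a trend analysis, consider a deliberately artificial case. The Python sketch below assumes a perfectly flat loss series and an arbitrary 50% upward offset in the spliced-in segment. The splice year of 1980 matches when the NCEI dataset begins, but the flat series and the 1.5 factor are assumptions made only for illustration, not estimates from the actual datasets.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    denominator = sum((x - mean_x) ** 2 for x in xs)
    return numerator / denominator


years = list(range(1900, 2018))

# "Apples": a trendless series with the same loss every year (arbitrary units).
apples = [10.0 for _ in years]

# "Oranges" stapled on: from the splice year onward, values come from a
# source that counts more categories of loss and therefore runs higher.
SPLICE_YEAR = 1980      # the NCEI series begins in 1980
ORANGE_FACTOR = 1.5     # assumption: hypothetical size of the offset
spliced = [
    value * ORANGE_FACTOR if year >= SPLICE_YEAR else value
    for year, value in zip(years, apples)
]

print(ols_slope(years, apples))       # 0.0
print(ols_slope(years, spliced) > 0)  # True
```

Nothing about the underlying losses changed in this toy example, yet the spliced series shows an upward trend that comes entirely from the change in accounting.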

How do we know this? Again, the numbers tell the story — Let’s again look at the dataset used by G18 in their study. In the table below, “NCEI” refers to the NOAA “billion dollar disaster” dataset, as reported by G18.

There can be no doubt that G18 used in research a dataset (“ICAT”) that they found online and whose provenance they did not know. How can we be sure that they did not know where the ICAT dataset came from?

The answer is provided by G18 themselves. Apparently without realizing it, they present as a robustness test of their “ICAT dataset” analysis the application of the same analysis to the two datasets that were mashed together to create the “ICAT dataset.” One would never test the robustness of an analysis in this manner, much less announce it in a paper.7

So how do we know that the data input to G18 makes all the difference to their top-line conclusion?

The answer is that G18 report in their Supplementary Information the results of applying their normalization methodology to the dataset of Weinkle et al. Their results show that when they use the correct base damage time series, the results of G18 fall in line with the broader consensus of the literature of the past 25 years: G18 indicates no trend in normalized hurricane losses 1900 to 2017.8 The headline results that have been cherry-picked by IPCC and the USNCA disappear when the erroneously spliced base damages dataset is corrected.

This is one of those rare cases where a fatal error in research is obvious, consequential and simple for anyone to document for themselves. I have requested of PNAS that G18 be retracted, and corrections from IPCC and the USNCA must occur as well. This is a simple but important test of scientific integrity for climate science. Let’s see what happens.


Grinsted, A., Ditlevsen, P., & Christensen, J. H. (2019). Normalized US hurricane damage estimates using area of total destruction, 1900–2018. Proceedings of the National Academy of Sciences, 116(48), 23942-23946.

This disclosure of data source should have been immediately fatal in peer review.

Joel has since gone on to lead a very successful weather forecasting company called OpenSnow. If you are a skier or just like weather, check it out!

If you are in re/insurance and would like to recreate such a tool using appropriate data, please be in touch.

ICAT never maintained that the dataset modified from Pielke et al. 2008 was to be used for research purposes. It was at that point just a marketing tool.

Direct damages primarily include property damage.

To be clear, I am making no allegation of research misconduct here, but it is clear that the authors of G18 were sloppy, uninformed, and incurious. Keystone Cops would be too kind. They could have just asked me and this mistake could have been avoided. Ostracism of experts who created the data you are seeking to use has consequences.

This is confirmed in G18 Table S1 where they report that the application of G18 methods to the base data of Weinkle et al. 2018 results in no meaningful trend from 1900 to 2017.

PNAS just reminded me that I submitted a letter on this in 2019. In the submitted letter (which refers to G18 as G19, after the paper's 2019 publication date), I wrote:

"the paper uses an online dataset from ICAT that has appended data from the recently developed NOAA NCEI “billion dollar disaster” dataset to a version of Pielke et al. 2008 (2, P08). This hybrid dataset introduces a major discontinuity in loss estimates, starting in 1980 when the NCEI dataset begins. NCEI and P08 employ dramatically different loss estimation techniques for base data loss estimates. Using data included with G19 to calculate the effects, this bias averages ~33% for storms post-1980 versus those pre-1940. Thus, under no circumstances should the current version of the ICAT dataset be used in research."

I received three reviews back of my letter, and on this point they said:

Reviewer 1: "We also observe that the most damaging storms have the largest increases in frequency (Fig S3c,d). This is consistent with the results from the analysis of the longer records from ICAT and Weinkle et al. (1,4)"

Not relevant

Reviewer 2: "the NCEI dataset 1980 onward is going to be MUCH more accurate for economic effects than either Pielke 2008, ICAT or Weinkle because it doesn't just look at wind or insured loss, it tries to estimate all economic losses. However, it is harder to compare with the older figures."

Yes, that's why you can't splice it!

Reviewer 3: "The author claims that the current version of ICAT should never be used in research. I find two things lacking in this critique: 1) the author provides no reference for this critique of the current ICAT dataset and 2) ignores that G19 include a robustness analysis based on two other datasets (Weinkle et al., 2018; NCEI, 2019)."

This reviewer refuses to believe that ICAT = Weinkle + NCEI, and that I helped to create ICAT. (Screams into the void!)

I did not resubmit the letter, as PNAS limits letters to 500 words and I suppose that I saw no point in arguing about the unreality of the "ICAT database."

At the time, I did not quantitatively explore the consequences for G19 results of using the mashed-up datasets. I should have. Because if I had done so, I would have called for retraction then rather than just writing a letter.

None of this matters. The fact is that G19 uses a dataset they found online, which is flawed and determines their results. That dataset does not actually exist. On that basis alone, G19 should be retracted.

"Ostracism of experts who created the data you are seeking to use has consequences."

The first question I would ask of G18 is: how could you ignore the different conclusions? How could they not ask themselves why they are different from everyone else? All other sets show no trend, yours does? Why is that? That's a grad student, rookie mistake. I would then ask the same question of the original reviewers of the PNAS paper. Yes, it is very sloppy work from the grad student, but inexcusable from the reviewers.

Second question I would ask is about the response letter from PNAS. Your reviewer no. 2 states "the NCEI dataset 1980 onward is going to be MUCH more accurate for economic effects..." Do you agree with that conclusion? I certainly don't. It's indirect, meaning it can't be measured accurately, only subjectively. Therefore, you cannot conclude your result is more accurate. It is different, that's all.

I think you'll get a response from Grinsted et al WAY before you get any acknowledgement of error from PNAS. Please keep us posted.